When working with Azure Functions in C# (specifically Azure Functions V2 in this article) you can specify bindings with hard-coded literal values.

For example, the following function has a queue trigger that reads messages from a queue called “input-queue”, an output queue binding that writes messages to “output-queue”, and a blob storage binding that writes blobs to “audit/{rand-guid}”:

    public static class MakeUppercase
    {
        [FunctionName("MakeUppercase")]
        public static void Run(
            [QueueTrigger("input-queue")] string inputQueueItem,
            [Queue("output-queue")] out string outputQueueItem,
            [Blob("audit/{rand-guid}")] out string outputBlobItem,
            ILogger log)
        {
            inputQueueItem = inputQueueItem.ToUpperInvariant();
            outputQueueItem = inputQueueItem;
            outputBlobItem = inputQueueItem;
        }
    }

All the binding values in the preceding code are hard coded; if they need to be changed once the Function App is deployed, a new release will be required.

Specifying Azure Function Bindings in Configuration

As an alternative, the %% syntax (a setting name wrapped in percent signs) can be used inside the binding string:

    public static class MakeUppercase
    {
        [FunctionName("MakeUppercase")]
        public static void Run(
            [QueueTrigger("%in%")] string inputQueueItem,
            [Queue("%out%")] out string outputQueueItem,
            [Blob("%blobout%")] out string outputBlobItem,
            ILogger log)
        {
            inputQueueItem = inputQueueItem.ToUpperInvariant();
            outputQueueItem = inputQueueItem;
            outputBlobItem = inputQueueItem;
        }
    }

Notice in the preceding code, parts of the binding configuration strings are specified between %%: "%in%", "%out%", and "%blobout%".

At runtime, these values will be read from configuration instead of being hard coded.
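To make the substitution concrete, here is a minimal sketch of the idea in plain C#. It is not the Functions runtime's actual implementation; the BindingTokenSketch class and its Resolve method are hypothetical names used only for illustration, and the settings are passed in as a simple dictionary rather than read from real configuration.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

public static class BindingTokenSketch
{
    // Hypothetical helper (illustration only): replaces each %name% token
    // in a binding string with the matching value from the settings.
    public static string Resolve(string binding, IReadOnlyDictionary<string, string> settings)
    {
        return Regex.Replace(binding, "%([^%]+)%", match =>
        {
            string name = match.Groups[1].Value;

            // Fail loudly when a token has no corresponding setting.
            if (!settings.TryGetValue(name, out string value))
            {
                throw new InvalidOperationException($"No setting found for '%{name}%'.");
            }

            return value;
        });
    }
}
```

With a dictionary containing "in" and "blobout" entries, Resolve("%in%", settings) would give "input-queue", while a value such as "audit/{rand-guid}" passes through with its {rand-guid} part untouched, because only the percent-delimited tokens are replaced in this sketch.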

Configuring Bindings at Development Time

When running locally, the configuration values will be read from the local.settings.json file, for example:

    {
      "IsEncrypted": false,
      "Values": {
        "AzureWebJobsStorage": "UseDevelopmentStorage=true",
        "FUNCTIONS_WORKER_RUNTIME": "dotnet",
        "in": "input-queue",
        "out": "output-queue",
        "blobout": "audit/{rand-guid}"
      }
    }

Notice the “in”, “out”, and “blobout” configuration elements that map to "%in%", "%out%", and "%blobout%".
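A token does not have to cover the whole binding string; it can also supply just part of it. The following sketch assumes a hypothetical "auditcontainer" setting (for example "auditcontainer": "audit" in the Values section) and a hypothetical function name, and keeps the {rand-guid} part of the blob path in code:

```csharp
using Microsoft.Azure.WebJobs;
using Microsoft.Extensions.Logging;

public static class MakeUppercaseAudit
{
    [FunctionName("MakeUppercaseAudit")]
    public static void Run(
        [QueueTrigger("%in%")] string inputQueueItem,
        // "auditcontainer" is a hypothetical setting name; only the
        // container comes from configuration, the blob name stays in code.
        [Blob("%auditcontainer%/{rand-guid}")] out string outputBlobItem,
        ILogger log)
    {
        outputBlobItem = inputQueueItem.ToUpperInvariant();
    }
}
```

Splitting the path this way lets the container be changed per environment while the naming scheme for individual blobs remains fixed in the release.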

Configuring Bindings in Azure

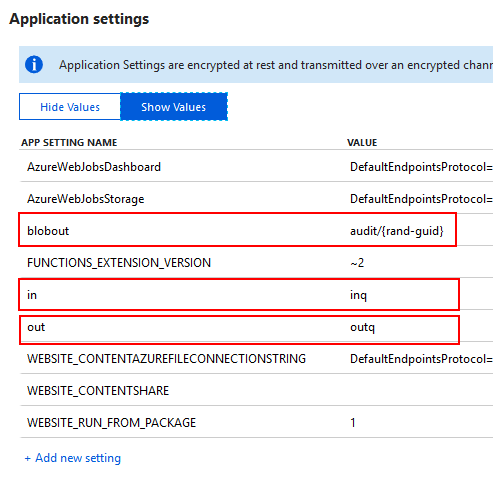

Once deployed and running in Azure, these settings will need to be present in the Function App Application Settings as the following screenshot demonstrates:

Now, if you want to modify the queue names or blob path, you can simply change the values in configuration. Note that you may have to restart the Function App for the changes to take effect. You will also need to manage the switch to the new queues and blobs, for example by deciding what to do if messages remain in the original input queue after the change.

