When moving to Azure Functions or other FaaS offerings, it’s possible to fall into the trap of “desktop development” thinking, whereby a function is implemented as if it were a piece of desktop code. This may negate the benefits of Azure Functions and may even cause function failures because of timeouts. When running under a Consumption Plan, an Azure Function can execute for 5 minutes before being shut down by the runtime. This limit can be configured to be longer in host.json (currently to a max of 10 minutes). For workloads that need to run longer than that, you could also investigate something like Azure Batch.
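As a minimal sketch of the timeout setting mentioned above: the limit is controlled by the functionTimeout property in host.json, expressed as a timespan string. The value below assumes you want the 10-minute Consumption Plan maximum; it is an illustration, not a recommendation.

```json
{
  "functionTimeout": "00:10:00"
}
```

Raising the timeout only buys extra time for a single invocation. It does not change the fact that all of the work happens in one function execution.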

Non Fan-Out Example

In this initial attempt, a blob-triggered function is created that receives a blob containing a data file. The function performs some processing on each line (simulated in the following code) and then writes multiple output blobs, one for each processed line.

    using System.Threading;
    using System.Diagnostics;

    public static void Run(TextReader myBlob, string name, Binder outputBinder, TraceWriter log)
    {
        var executionTimer = Stopwatch.StartNew();

        log.Info($"C# Blob trigger function Processed blob\n Name:{name}");

        // Process the file one line at a time, all within this single invocation
        string dataLine;
        while ((dataLine = myBlob.ReadLine()) != null)
        {
            log.Info($"Processing line: {dataLine}");

            string processedDataLine = ProcessDataLine(dataLine);

            // Write each processed line to its own uniquely named output blob
            string path = $"batch-data-out/{Guid.NewGuid()}";
            using (var writer = outputBinder.Bind<TextWriter>(new BlobAttribute(path)))
            {
                log.Info($"Writing output line: {processedDataLine}");
                writer.Write(processedDataLine);
            }
        }

        executionTimer.Stop();
        log.Info($"Processing time: {executionTimer.Elapsed}");
    }

    private static string ProcessDataLine(string dataLine)
    {
        // Simulate expensive processing
        Thread.Sleep(1000);

        return dataLine;
    }

Uploading a normal-sized input data file may not result in any errors, but because each line takes about a second to process and every line is handled in the same invocation, a larger file may cause a function timeout:

Microsoft.Azure.WebJobs.Host: Timeout value of 00:05:00 was exceeded by function: Functions.ProcessBatchDataFile.

Fan-Out Example

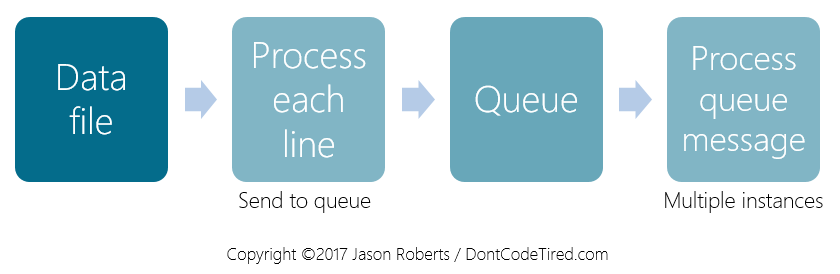

To take better advantage of Azure Functions, the following pattern can be used, whereby there is no processing in the initial function. Instead, the function just splits the file into lines and puts each line on a storage queue. Another function is triggered by these queue messages and does the actual processing. This means that as the number of messages in the queue grows, multiple instances of the queue-triggered function will be created to handle the load.

    public static async Task Run(TextReader myBlob, string name, IAsyncCollector<string> outputQueue, TraceWriter log)
    {
        log.Info($"C# Blob trigger function Processed blob\n Name:{name}");

        // No processing here: each line simply becomes one queue message
        string dataLine;
        while ((dataLine = await myBlob.ReadLineAsync()) != null)
        {
            log.Info($"Queuing line: {dataLine}");

            await outputQueue.AddAsync(dataLine);
        }
    }
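The function above relies on its bindings to connect the blob trigger and the output queue. A function.json along the following lines would wire them up. This is only a sketch: the binding names match the parameters in the code, but the container name batch-data-in, the queue name data-lines, and the use of the AzureWebJobsStorage connection are assumptions made for illustration.

```json
{
  "bindings": [
    {
      "name": "myBlob",
      "type": "blobTrigger",
      "direction": "in",
      "path": "batch-data-in/{name}",
      "connection": "AzureWebJobsStorage"
    },
    {
      "name": "outputQueue",
      "type": "queue",
      "direction": "out",
      "queueName": "data-lines",
      "connection": "AzureWebJobsStorage"
    }
  ]
}
```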

And the queue-triggered function that does the actual work:

    using System;
    using System.Threading;

    public static void Run(string dataLine, out string outputBlob, TraceWriter log)
    {
        log.Info($"Processing data line: {dataLine}");

        string processedDataLine = ProcessDataLine(dataLine);

        log.Info($"Writing processed line to blob: {processedDataLine}");

        // Assigning the out parameter writes the value to the bound output blob
        outputBlob = processedDataLine;
    }

    private static string ProcessDataLine(string dataLine)
    {
        // Simulate expensive processing
        Thread.Sleep(1000);

        return dataLine;
    }
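For the queue-triggered function, the bindings might look something like the sketch below. The binding names match the parameters in the code and the batch-data-out container comes from the earlier example, but the queue name data-lines and the AzureWebJobsStorage connection are assumptions. The {rand-guid} binding expression generates a new GUID for each output blob name, playing the role that Guid.NewGuid() played in the first version.

```json
{
  "bindings": [
    {
      "name": "dataLine",
      "type": "queueTrigger",
      "direction": "in",
      "queueName": "data-lines",
      "connection": "AzureWebJobsStorage"
    },
    {
      "name": "outputBlob",
      "type": "blob",
      "direction": "out",
      "path": "batch-data-out/{rand-guid}",
      "connection": "AzureWebJobsStorage"
    }
  ]
}
```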

When architecting processing this way, there are other limits that may also cause problems, such as (but not limited to) the scalability limits of the storage queue itself.

To learn more about Azure Functions, check out my Pluralsight courses: Azure Function Triggers Quick Start and Reducing C# Code Duplication in Azure Functions.
