This is the second part in a series of articles.

Before creating durable functions it’s important to understand the logical types of functions involved. There are essentially 3 logical types of functions:

- Client function: the entry point function, called by the end client to start the workflow, e.g. an HTTP triggered function

- Orchestrator function: defines the workflow and what activity functions to call

- Activity function: the function(s) that do the actual work/processing

When you create a durable function in Visual Studio, the template creates each of these 3 functions for you as a starting point.

Setup Development Environment

The first thing to do is set up your development environment:

- Install Visual Studio 2019 (e.g. the free community version) – when installing, remember to install the Azure development workload as this enables functions development

- Install and check that the Azure storage emulator is running – this allows you to run/test functions locally without deploying to Azure in the cloud

Create Azure Functions Project

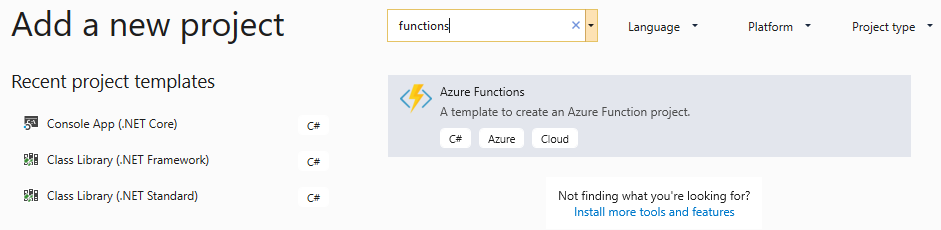

Next, open Visual Studio 2019 and create a new Azure Functions project as the following screenshot shows:

Once the project is created, you add individual functions to it.

At this point you should also manage NuGet packages for the project and update any packages to the latest versions.

Add a Function

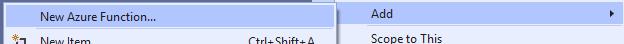

Right click the new project and choose Add –> New Azure Function.

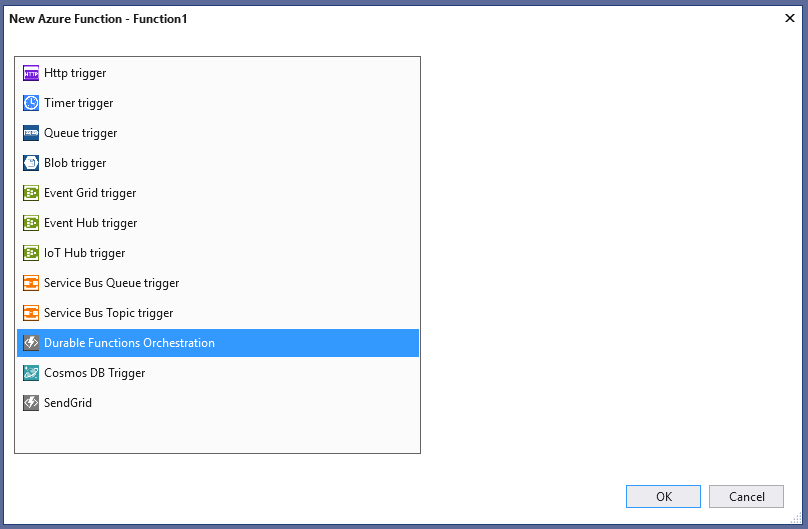

Give the function a name (or leave it as the default “Function1.cs”) and click ok - this will open the function template chooser:

Select Durable Functions Orchestration, and click OK.

This will create the following starter code:

    using System.Collections.Generic;
    using System.Net.Http;
    using System.Threading.Tasks;
    using Microsoft.Azure.WebJobs;
    using Microsoft.Azure.WebJobs.Extensions.Http;
    using Microsoft.Azure.WebJobs.Host;
    using Microsoft.Extensions.Logging;

    namespace DontCodeTiredDemosV2.Durables
    {
        public static class Function1
        {
            [FunctionName("Function1")]
            public static async Task<List<string>> RunOrchestrator(
                [OrchestrationTrigger] DurableOrchestrationContext context)
            {
                var outputs = new List<string>();

                // Replace "hello" with the name of your Durable Activity Function.
                outputs.Add(await context.CallActivityAsync<string>("Function1_Hello", "Tokyo"));
                outputs.Add(await context.CallActivityAsync<string>("Function1_Hello", "Seattle"));
                outputs.Add(await context.CallActivityAsync<string>("Function1_Hello", "London"));

                // returns ["Hello Tokyo!", "Hello Seattle!", "Hello London!"]
                return outputs;
            }

            [FunctionName("Function1_Hello")]
            public static string SayHello([ActivityTrigger] string name, ILogger log)
            {
                log.LogInformation($"Saying hello to {name}.");
                return $"Hello {name}!";
            }

            [FunctionName("Function1_HttpStart")]
            public static async Task<HttpResponseMessage> HttpStart(
                [HttpTrigger(AuthorizationLevel.Anonymous, "get", "post")]HttpRequestMessage req,
                [OrchestrationClient]DurableOrchestrationClient starter,
                ILogger log)
            {
                // Function input comes from the request content.
                string instanceId = await starter.StartNewAsync("Function1", null);

                log.LogInformation($"Started orchestration with ID = '{instanceId}'.");

                return starter.CreateCheckStatusResponse(req, instanceId);
            }
        }
    }

Notice in the preceding code the 3 types of function: client, orchestrator, and activity.

We can make this a bit clearer by renaming a few things:

    using System.Collections.Generic;
    using System.Net.Http;
    using System.Threading.Tasks;
    using Microsoft.Azure.WebJobs;
    using Microsoft.Azure.WebJobs.Extensions.Http;
    using Microsoft.Azure.WebJobs.Host;
    using Microsoft.Extensions.Logging;

    namespace DurableDemos
    {
        public static class Function1
        {
            [FunctionName("OrchestratorFunction")]
            public static async Task<List<string>> RunOrchestrator(
                [OrchestrationTrigger] DurableOrchestrationContext context)
            {
                var outputs = new List<string>();

                // Replace "hello" with the name of your Durable Activity Function.
                outputs.Add(await context.CallActivityAsync<string>("ActivityFunction", "Tokyo"));
                outputs.Add(await context.CallActivityAsync<string>("ActivityFunction", "Seattle"));
                outputs.Add(await context.CallActivityAsync<string>("ActivityFunction", "London"));

                // returns ["Hello Tokyo!", "Hello Seattle!", "Hello London!"]
                return outputs;
            }

            [FunctionName("ActivityFunction")]
            public static string SayHello([ActivityTrigger] string name, ILogger log)
            {
                log.LogInformation($"Saying hello to {name}.");
                return $"Hello {name}!";
            }

            [FunctionName("ClientFunction")]
            public static async Task<HttpResponseMessage> HttpStart(
                [HttpTrigger(AuthorizationLevel.Anonymous, "get", "post")]HttpRequestMessage req,
                [OrchestrationClient]DurableOrchestrationClient starter,
                ILogger log)
            {
                // Function input comes from the request content.
                string instanceId = await starter.StartNewAsync("OrchestratorFunction", null);

                log.LogInformation($"Started orchestration with ID = '{instanceId}'.");

                return starter.CreateCheckStatusResponse(req, instanceId);
            }
        }
    }

There are 3 Azure Functions in this single Function1 class.

First, the “ClientFunction” is what starts the workflow. In this example it’s triggered by an HTTP call from the client, but you could use any trigger here, for example a message on a queue or a timer. When this function is called, it doesn’t do any processing itself but rather creates an instance of the workflow that is defined in the "OrchestratorFunction". The line `string instanceId = await starter.StartNewAsync("OrchestratorFunction", null);` is what kicks off the workflow: the first argument is a string naming the orchestration to start, and the second argument (in this example null) is any input that needs to be passed to the orchestrator. The final line `return starter.CreateCheckStatusResponse(req, instanceId);` returns an HttpResponseMessage to the HTTP caller that contains the instance ID and a URL for querying the status of the orchestration.

The second function, "OrchestratorFunction", defines the activity functions that make up the workflow. In this function the CallActivityAsync method defines what activities get executed as part of the orchestration; in this example the same activity, "ActivityFunction", is called 3 times. The CallActivityAsync method takes 2 parameters: the first is a string naming the activity function to execute, and the second is any data to be passed to the activity function, in this case the hardcoded strings "Tokyo", "Seattle", and "London". Because each call is awaited, the activities run one after another. Once they have all completed, the orchestrator returns a list: ["Hello Tokyo!", "Hello Seattle!", "Hello London!"].

The third function "ActivityFunction" is where the actual work/processing takes place.
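The second argument to StartNewAsync described above can carry real input instead of null. The following is a minimal sketch, assuming the same Durable Functions 1.x API used in this article. The class name InputDemo and the function names "InputClientFunction" and "InputOrchestratorFunction" are hypothetical, and the request body is assumed to be a plain city name rather than JSON:

```csharp
using System.Net.Http;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.Http;

public static class InputDemo
{
    // Hypothetical client function: forwards the request body to the orchestrator.
    [FunctionName("InputClientFunction")]
    public static async Task<HttpResponseMessage> HttpStart(
        [HttpTrigger(AuthorizationLevel.Anonymous, "post")] HttpRequestMessage req,
        [OrchestrationClient] DurableOrchestrationClient starter)
    {
        // Assumption: the raw request body (for example "Paris") is the input.
        string city = await req.Content.ReadAsStringAsync();

        // The second argument is handed to the orchestrator as its input.
        string instanceId = await starter.StartNewAsync("InputOrchestratorFunction", city);

        return starter.CreateCheckStatusResponse(req, instanceId);
    }

    // Hypothetical orchestrator: reads the input and passes it on to the activity.
    [FunctionName("InputOrchestratorFunction")]
    public static async Task<string> RunOrchestrator(
        [OrchestrationTrigger] DurableOrchestrationContext context)
    {
        // Read back the value that was passed to StartNewAsync.
        string city = context.GetInput<string>();

        // Reuses the "ActivityFunction" activity defined earlier in the article.
        return await context.CallActivityAsync<string>("ActivityFunction", city);
    }
}
```

Posting a body such as Paris to this client function would start an orchestration that calls "ActivityFunction" with that value instead of a hardcoded city.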

Testing Durable Functions Locally

The project can be run locally by pressing F5 in Visual Studio. This will start the local functions runtime:

                      %%%%%%
                     %%%%%%
                @   %%%%%%    @
              @@   %%%%%%      @@
           @@@    %%%%%%%%%%%    @@@
         @@      %%%%%%%%%%        @@
           @@         %%%%       @@
             @@      %%%       @@
               @@    %%      @@
                    %%
                    %

    Azure Functions Core Tools (2.7.1373 Commit hash: cd9bfca26f9c7fe06ce245f5bf69bc6486a685dd)
    Function Runtime Version: 2.0.12507.0
    [9/07/2019 3:29:16 AM] Starting Rpc Initialization Service.
    [9/07/2019 3:29:16 AM] Initializing RpcServer
    [9/07/2019 3:29:16 AM] Building host: startup suppressed:False, configuration suppressed: False
    [9/07/2019 3:29:17 AM] Initializing extension with the following settings: Initializing extension with the following settings:
    [9/07/2019 3:29:17 AM] AzureStorageConnectionStringName: , MaxConcurrentActivityFunctions: 80, MaxConcurrentOrchestratorFunctions: 80, PartitionCount: 4, ControlQueueBatchSize: 32, ControlQueueVisibilityTimeout: 00:05:00, WorkItemQueueVisibilityTimeout: 00:05:00, ExtendedSessionsEnabled: False, EventGridTopicEndpoint: , NotificationUrl: http://localhost:7071/runtime/webhooks/durabletask, TrackingStoreConnectionStringName: , MaxQueuePollingInterval: 00:00:30, LogReplayEvents: False. InstanceId: . Function: . HubName: DurableFunctionsHub. AppName: . SlotName: . ExtensionVersion: 1.8.2. SequenceNumber: 0.
    [9/07/2019 3:29:17 AM] Initializing Host.
    [9/07/2019 3:29:17 AM] Host initialization: ConsecutiveErrors=0, StartupCount=1
    [9/07/2019 3:29:17 AM] LoggerFilterOptions
    [9/07/2019 3:29:17 AM] {
    [9/07/2019 3:29:17 AM]   "MinLevel": "None",
    [9/07/2019 3:29:17 AM]   "Rules": [
    [9/07/2019 3:29:17 AM]     {
    [9/07/2019 3:29:17 AM]       "ProviderName": null,
    [9/07/2019 3:29:17 AM]       "CategoryName": null,
    [9/07/2019 3:29:17 AM]       "LogLevel": null,
    [9/07/2019 3:29:17 AM]       "Filter": "<AddFilter>b__0"
    [9/07/2019 3:29:17 AM]     },
    [9/07/2019 3:29:17 AM]     {
    [9/07/2019 3:29:17 AM]       "ProviderName": "Microsoft.Azure.WebJobs.Script.WebHost.Diagnostics.SystemLoggerProvider",
    [9/07/2019 3:29:17 AM]       "CategoryName": null,
    [9/07/2019 3:29:17 AM]       "LogLevel": "None",
    [9/07/2019 3:29:17 AM]       "Filter": null
    [9/07/2019 3:29:17 AM]     },
    [9/07/2019 3:29:17 AM]     {
    [9/07/2019 3:29:17 AM]       "ProviderName": "Microsoft.Azure.WebJobs.Script.WebHost.Diagnostics.SystemLoggerProvider",
    [9/07/2019 3:29:17 AM]       "CategoryName": null,
    [9/07/2019 3:29:17 AM]       "LogLevel": null,
    [9/07/2019 3:29:17 AM]       "Filter": "<AddFilter>b__0"
    [9/07/2019 3:29:17 AM]     }
    [9/07/2019 3:29:17 AM]   ]
    [9/07/2019 3:29:17 AM] }
    [9/07/2019 3:29:17 AM] FunctionResultAggregatorOptions
    [9/07/2019 3:29:17 AM] {
    [9/07/2019 3:29:17 AM]   "BatchSize": 1000,
    [9/07/2019 3:29:17 AM]   "FlushTimeout": "00:00:30",
    [9/07/2019 3:29:17 AM]   "IsEnabled": true
    [9/07/2019 3:29:17 AM] }
    [9/07/2019 3:29:17 AM] SingletonOptions
    [9/07/2019 3:29:17 AM] {
    [9/07/2019 3:29:17 AM]   "LockPeriod": "00:00:15",
    [9/07/2019 3:29:17 AM]   "ListenerLockPeriod": "00:00:15",
    [9/07/2019 3:29:17 AM]   "LockAcquisitionTimeout": "10675199.02:48:05.4775807",
    [9/07/2019 3:29:17 AM]   "LockAcquisitionPollingInterval": "00:00:05",
    [9/07/2019 3:29:17 AM]   "ListenerLockRecoveryPollingInterval": "00:01:00"
    [9/07/2019 3:29:17 AM] }
    [9/07/2019 3:29:17 AM] Starting JobHost
    [9/07/2019 3:29:17 AM] Starting Host (HostId=desktopkghqug8-1671102379, InstanceId=015cba37-1f46-41a1-b3c1-19f341c4d3d9, Version=2.0.12507.0, ProcessId=18728, AppDomainId=1, InDebugMode=False, InDiagnosticMode=False, FunctionsExtensionVersion=)
    [9/07/2019 3:29:17 AM] Loading functions metadata
    [9/07/2019 3:29:17 AM] 3 functions loaded
    [9/07/2019 3:29:17 AM] Generating 3 job function(s)
    [9/07/2019 3:29:17 AM] Found the following functions:
    [9/07/2019 3:29:17 AM] DurableDemos.Function1.SayHello
    [9/07/2019 3:29:17 AM] DurableDemos.Function1.HttpStart
    [9/07/2019 3:29:17 AM] DurableDemos.Function1.RunOrchestrator
    [9/07/2019 3:29:17 AM]
    [9/07/2019 3:29:17 AM] Host initialized (221ms)
    [9/07/2019 3:29:18 AM] Starting task hub worker. InstanceId: . Function: . HubName: DurableFunctionsHub. AppName: . SlotName: . ExtensionVersion: 1.8.2. SequenceNumber: 1.
    [9/07/2019 3:29:18 AM] Host started (655ms)
    [9/07/2019 3:29:18 AM] Job host started
    Hosting environment: Production
    Content root path: C:\Users\Admin\OneDrive\Documents\dct\19\DontCodeTiredDemosV2\DontCodeTiredDemosV2\DurableDemos\bin\Debug\netcoreapp2.1
    Now listening on: http://0.0.0.0:7071
    Application started. Press Ctrl+C to shut down.

    Http Functions:

            ClientFunction: [GET,POST] http://localhost:7071/api/ClientFunction

    [9/07/2019 3:29:23 AM] Host lock lease acquired by instance ID '000000000000000000000000E72C9561'.

Now to start an instance of the workflow, the following PowerShell can be used:

    $R = Invoke-WebRequest 'http://localhost:7071/api/ClientFunction' -Method 'POST'

This will result in the following rather verbose output:

    [9/07/2019 3:30:55 AM] Executing HTTP request: {
    [9/07/2019 3:30:55 AM]   "requestId": "36d9f77f-1ceb-43ec-aa1d-5702b42a8e15",
    [9/07/2019 3:30:55 AM]   "method": "POST",
    [9/07/2019 3:30:55 AM]   "uri": "/api/ClientFunction"
    [9/07/2019 3:30:55 AM] }
    [9/07/2019 3:30:55 AM] Executing 'ClientFunction' (Reason='This function was programmatically called via the host APIs.', Id=a014a4ae-77ff-46c7-b812-344bd442da38)
    [9/07/2019 3:30:55 AM] f5a38610c07a4c90815f2936451628b8: Function 'OrchestratorFunction (Orchestrator)' scheduled. Reason: NewInstance. IsReplay: False. State: Scheduled. HubName: DurableFunctionsHub. AppName: . SlotName: . ExtensionVersion: 1.8.2. SequenceNumber: 2.
    [9/07/2019 3:30:56 AM] Started orchestration with ID = 'f5a38610c07a4c90815f2936451628b8'.
    [9/07/2019 3:30:56 AM] Executed 'ClientFunction' (Succeeded, Id=a014a4ae-77ff-46c7-b812-344bd442da38)
    [9/07/2019 3:30:56 AM] Executing 'OrchestratorFunction' (Reason='', Id=d6263f09-2372-4bfb-9473-70f03874cfee)
    [9/07/2019 3:30:56 AM] f5a38610c07a4c90815f2936451628b8: Function 'OrchestratorFunction (Orchestrator)' started. IsReplay: False. Input: (16 bytes). State: Started. HubName: DurableFunctionsHub. AppName: . SlotName: . ExtensionVersion: 1.8.2. SequenceNumber: 3.
    [9/07/2019 3:30:56 AM] f5a38610c07a4c90815f2936451628b8: Function 'ActivityFunction (Activity)' scheduled. Reason: OrchestratorFunction. IsReplay: False. State: Scheduled. HubName: DurableFunctionsHub. AppName: . SlotName: . ExtensionVersion: 1.8.2. SequenceNumber: 4.
    [9/07/2019 3:30:56 AM] Executed 'OrchestratorFunction' (Succeeded, Id=d6263f09-2372-4bfb-9473-70f03874cfee)
    [9/07/2019 3:30:56 AM] f5a38610c07a4c90815f2936451628b8: Function 'OrchestratorFunction (Orchestrator)' awaited. IsReplay: False. State: Awaited. HubName: DurableFunctionsHub. AppName: . SlotName: . ExtensionVersion: 1.8.2. SequenceNumber: 5.
    [9/07/2019 3:30:56 AM] Executed HTTP request: {
    [9/07/2019 3:30:56 AM]   "requestId": "36d9f77f-1ceb-43ec-aa1d-5702b42a8e15",
    [9/07/2019 3:30:56 AM]   "method": "POST",
    [9/07/2019 3:30:56 AM]   "uri": "/api/ClientFunction",
    [9/07/2019 3:30:56 AM]   "identities": [
    [9/07/2019 3:30:56 AM]     {
    [9/07/2019 3:30:56 AM]       "type": "WebJobsAuthLevel",
    [9/07/2019 3:30:56 AM]       "level": "Admin"
    [9/07/2019 3:30:56 AM]     }
    [9/07/2019 3:30:56 AM]   ],
    [9/07/2019 3:30:56 AM]   "status": 202,
    [9/07/2019 3:30:56 AM]   "duration": 699
    [9/07/2019 3:30:56 AM] }
    [9/07/2019 3:30:56 AM] f5a38610c07a4c90815f2936451628b8: Function 'ActivityFunction (Activity)' started. IsReplay: False. Input: (36 bytes). State: Started. HubName: DurableFunctionsHub. AppName: . SlotName: . ExtensionVersion: 1.8.2. SequenceNumber: 6.
    [9/07/2019 3:30:56 AM] Executing 'ActivityFunction' (Reason='', Id=d35e9667-f77b-4328-aff9-4ecbc3b66e89)
    [9/07/2019 3:30:56 AM] Saying hello to Tokyo.
    [9/07/2019 3:30:56 AM] Executed 'ActivityFunction' (Succeeded, Id=d35e9667-f77b-4328-aff9-4ecbc3b66e89)
    [9/07/2019 3:30:56 AM] f5a38610c07a4c90815f2936451628b8: Function 'ActivityFunction (Activity)' completed. ContinuedAsNew: False. IsReplay: False. Output: (56 bytes). State: Completed. HubName: DurableFunctionsHub. AppName: . SlotName: . ExtensionVersion: 1.8.2. SequenceNumber: 7.
    [9/07/2019 3:30:56 AM] Executing 'OrchestratorFunction' (Reason='', Id=5cc451d2-dd5b-4cb5-b10a-02e7bca71a08)
    [9/07/2019 3:30:56 AM] f5a38610c07a4c90815f2936451628b8: Function 'ActivityFunction (Activity)' scheduled. Reason: OrchestratorFunction. IsReplay: False. State: Scheduled. HubName: DurableFunctionsHub. AppName: . SlotName: . ExtensionVersion: 1.8.2. SequenceNumber: 8.
    [9/07/2019 3:30:56 AM] Executed 'OrchestratorFunction' (Succeeded, Id=5cc451d2-dd5b-4cb5-b10a-02e7bca71a08)
    [9/07/2019 3:30:56 AM] f5a38610c07a4c90815f2936451628b8: Function 'OrchestratorFunction (Orchestrator)' awaited. IsReplay: False. State: Awaited. HubName: DurableFunctionsHub. AppName: . SlotName: . ExtensionVersion: 1.8.2. SequenceNumber: 9.
    [9/07/2019 3:30:56 AM] f5a38610c07a4c90815f2936451628b8: Function 'ActivityFunction (Activity)' started. IsReplay: False. Input: (44 bytes). State: Started. HubName: DurableFunctionsHub. AppName: . SlotName: . ExtensionVersion: 1.8.2. SequenceNumber: 10.
    [9/07/2019 3:30:56 AM] Executing 'ActivityFunction' (Reason='', Id=719c797b-9ee1-4167-972c-c0b0c4dd886c)
    [9/07/2019 3:30:56 AM] Saying hello to Seattle.
    [9/07/2019 3:30:56 AM] Executed 'ActivityFunction' (Succeeded, Id=719c797b-9ee1-4167-972c-c0b0c4dd886c)
    [9/07/2019 3:30:56 AM] f5a38610c07a4c90815f2936451628b8: Function 'ActivityFunction (Activity)' completed. ContinuedAsNew: False. IsReplay: False. Output: (64 bytes). State: Completed. HubName: DurableFunctionsHub. AppName: . SlotName: . ExtensionVersion: 1.8.2. SequenceNumber: 11.
    [9/07/2019 3:30:56 AM] Executing 'OrchestratorFunction' (Reason='', Id=0b115432-1d9d-43af-b5da-3e3607b808ac)
    [9/07/2019 3:30:56 AM] f5a38610c07a4c90815f2936451628b8: Function 'ActivityFunction (Activity)' scheduled. Reason: OrchestratorFunction. IsReplay: False. State: Scheduled. HubName: DurableFunctionsHub. AppName: . SlotName: . ExtensionVersion: 1.8.2. SequenceNumber: 12.
    [9/07/2019 3:30:56 AM] Executed 'OrchestratorFunction' (Succeeded, Id=0b115432-1d9d-43af-b5da-3e3607b808ac)
    [9/07/2019 3:30:56 AM] f5a38610c07a4c90815f2936451628b8: Function 'OrchestratorFunction (Orchestrator)' awaited. IsReplay: False. State: Awaited. HubName: DurableFunctionsHub. AppName: . SlotName: . ExtensionVersion: 1.8.2. SequenceNumber: 13.
    [9/07/2019 3:30:56 AM] f5a38610c07a4c90815f2936451628b8: Function 'ActivityFunction (Activity)' started. IsReplay: False. Input: (40 bytes). State: Started. HubName: DurableFunctionsHub. AppName: . SlotName: . ExtensionVersion: 1.8.2. SequenceNumber: 14.
    [9/07/2019 3:30:56 AM] Executing 'ActivityFunction' (Reason='', Id=2cbd8e65-3e1d-4fbb-8d92-afe4b7e6a012)
    [9/07/2019 3:30:56 AM] Saying hello to London.
    [9/07/2019 3:30:56 AM] Executed 'ActivityFunction' (Succeeded, Id=2cbd8e65-3e1d-4fbb-8d92-afe4b7e6a012)
    [9/07/2019 3:30:56 AM] f5a38610c07a4c90815f2936451628b8: Function 'ActivityFunction (Activity)' completed. ContinuedAsNew: False. IsReplay: False. Output: (60 bytes). State: Completed. HubName: DurableFunctionsHub. AppName: . SlotName: . ExtensionVersion: 1.8.2. SequenceNumber: 15.
    [9/07/2019 3:30:56 AM] Executing 'OrchestratorFunction' (Reason='', Id=10215167-9de6-4197-bc65-1d819b8471cb)
    [9/07/2019 3:30:56 AM] f5a38610c07a4c90815f2936451628b8: Function 'OrchestratorFunction (Orchestrator)' completed. ContinuedAsNew: False. IsReplay: False. Output: (196 bytes). State: Completed. HubName: DurableFunctionsHub. AppName: . SlotName: . ExtensionVersion: 1.8.2. SequenceNumber: 16.
    [9/07/2019 3:30:56 AM] Executed 'OrchestratorFunction' (Succeeded, Id=10215167-9de6-4197-bc65-1d819b8471cb)

A few key things to notice:

- Executing 'ClientFunction' – this is PowerShell calling the HTTP trigger function.

- Started orchestration with ID = 'f5a38610c07a4c90815f2936451628b8' – the HTTP client function has started an instance of the orchestration.

- Executing 'ActivityFunction' (3 times) – the 3 activity calls defined in the orchestrator function.

If we modify the "ActivityFunction" to introduce a simulated processing time:

    // Requires "using System.Threading;" at the top of the file.
    [FunctionName("ActivityFunction")]
    public static string SayHello([ActivityTrigger] string name, ILogger log)
    {
        Thread.Sleep(5000); // simulate a longer processing delay (5 seconds)

        log.LogInformation($"Saying hello to {name}.");
        return $"Hello {name}!";
    }

Now run the project again and once again make the request from PowerShell. The client function returns a result to PowerShell “immediately”, without waiting for the activities to finish:

    StatusCode        : 202
    StatusDescription : Accepted
    Content           : {"id":"5ed815a8fe3d497993266d49213a7c09","statusQueryGetUri":"http://localhost:7071/runtime/webhook
                        s/durabletask/instances/5ed815a8fe3d497993266d49213a7c09?taskHub=DurableFunctionsHub&connection=Sto
                        ra...
    RawContent        : HTTP/1.1 202 Accepted
                        Retry-After: 10
                        Content-Length: 1232
                        Content-Type: application/json; charset=utf-8
                        Date: Tue, 09 Jul 2019 03:38:46 GMT
                        Location: http://localhost:7071/runtime/webhooks/durab...
    Forms             : {}
    Headers           : {[Retry-After, 10], [Content-Length, 1232], [Content-Type, application/json; charset=utf-8],
                        [Date, Tue, 09 Jul 2019 03:38:46 GMT]...}
    Images            : {}
    InputFields       : {}
    Links             : {}
    ParsedHtml        : mshtml.HTMLDocumentClass
    RawContentLength  : 1232

So even though the HTTP request is completed (with a 202 Accepted HTTP code), the orchestration is still running.
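One way to watch the orchestration finish is to poll the status URL that the 202 response points to. The following is a minimal console sketch, not part of the functions project. It assumes that the Location header of the 202 response holds the status query URL and that this URL keeps answering 202 while the instance is still pending or running. The names StatusPoller and IsInProgress are made up for illustration, and the 2 second polling interval is arbitrary:

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;

public static class StatusPoller
{
    // Hypothetical helper: 202 (Accepted) is taken to mean "still pending or running".
    public static bool IsInProgress(HttpStatusCode code) => code == HttpStatusCode.Accepted;

    public static async Task Main()
    {
        using (var http = new HttpClient())
        {
            // Start a new orchestration instance through the HTTP-triggered client function.
            HttpResponseMessage start = await http.PostAsync(
                "http://localhost:7071/api/ClientFunction", null);

            // Assumption: the 202 response carries the status query URL in its Location header.
            Uri statusUri = start.Headers.Location
                ?? throw new InvalidOperationException("No Location header in the response.");

            while (true)
            {
                HttpResponseMessage status = await http.GetAsync(statusUri);

                // Print the HTTP status code followed by the JSON status document.
                Console.WriteLine((int)status.StatusCode);
                Console.WriteLine(await status.Content.ReadAsStringAsync());

                if (!IsInProgress(status.StatusCode))
                {
                    break; // anything other than 202: the instance is no longer in progress
                }

                // Wait a little before asking again.
                await Task.Delay(TimeSpan.FromSeconds(2));
            }
        }
    }
}
```

Run this while the functions host is listening on port 7071. With the 5 second delay in each activity, the loop should print several in-progress responses before the final one.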

Later in this series of articles we’ll dig into more detail about what is going on behind the scenes.

