Document databases are a form of NoSQL database that store items (objects) as single documents rather than potentially splitting the data among multiple relational database tables.

Marten is a .NET library that enables the storing, loading, deleting, and querying of documents. Objects in an application can be stored in the document database and later retrieved as objects again. This approach avoids the additional mapping “plumbing code” that ORMs require.

Marten is not a database itself but rather a library that uses the advanced JSON features of the open source, cross-platform PostgreSQL database.

Once the Marten NuGet package is installed, storing .NET objects as documents takes a few steps.

First, define the “document” to be stored. At the most basic level, this is a class with a field or property that represents the document's identity in the database. There are several ways to define identity; one is to follow the naming convention of calling the member “Id”, as the following class shows:

```csharp
using System.Collections.Generic;

class Customer
{
    public int Id { get; set; }

    public string Name { get; set; }

    public List<Address> Addresses { get; set; } = new List<Address>();
}

internal class Address
{
    public string Line1 { get; set; }

    public string Line2 { get; set; }

    public string Line3 { get; set; }
}
```

Notice in the preceding code that the Address class does not have an Id. This is because the address information will be stored inside the overall Customer document, rather than, for example, as rows in a separate Address table in a relational database.
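To make the “Id” naming convention concrete, here is a hypothetical reflection helper, `FindIdMember`, that looks for a public property or field named exactly “Id”. It is only a sketch of the idea, not how Marten resolves identity internally (Marten supports other ways of defining identity too), but it shows why Customer can act as a document while Address, which only ever lives inside a Customer, needs no identity of its own.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Customer has an "Id" member; Address does not.
Console.WriteLine(FindIdMember(typeof(Customer))?.Name ?? "(none)"); // Id
Console.WriteLine(FindIdMember(typeof(Address))?.Name ?? "(none)");  // (none)

// Hypothetical helper (not Marten's code): returns the public instance
// property or field named exactly "Id", or null if the type has neither.
static MemberInfo FindIdMember(Type documentType)
{
    const BindingFlags flags = BindingFlags.Public | BindingFlags.Instance;
    return (MemberInfo)documentType.GetProperty("Id", flags)
        ?? documentType.GetField("Id", flags);
}

class Customer
{
    public int Id { get; set; }
    public string Name { get; set; } = "";
    public List<Address> Addresses { get; set; } = new List<Address>();
}

class Address
{
    public string Line1 { get; set; } = "";
    public string Line2 { get; set; } = "";
    public string Line3 { get; set; } = "";
}
```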

To work with the database, a document store instance is required. It can be created with additional configuration or with the simple code shown below:

```csharp
using Marten;

var connectionString = "host=localhost;database=CustomerDb;password=YOURPASSWORD;username=postgres";

var store = DocumentStore.For(connectionString);
```

Working with documents happens through a session instance. Marten provides several types of session; this article uses a lightweight session to store documents and a read-only query session to retrieve them.
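A session behaves as a unit of work: calling `Store` only registers a pending change, and nothing is written to PostgreSQL until `SaveChanges` runs, at which point the pending changes are committed together. The `InMemorySession` class below is a hypothetical stand-in used purely to illustrate that pattern with a dictionary in place of a table; it is not Marten's API, and its simple counter for assigning Ids is an assumption rather than Marten's identity generation strategy.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// A dictionary keyed by Id stands in for the mt_doc_customer table.
var database = new Dictionary<int, string>();
var session = new InMemorySession(database);

session.Store(new Customer { Name = "Arnold" });
Console.WriteLine(database.Count); // 0: Store only queued the document

session.SaveChanges();
Console.WriteLine(database.Count); // 1: SaveChanges wrote the pending document
Console.WriteLine(database[1]);    // {"Id":1,"Name":"Arnold"}

// Hypothetical unit-of-work session, for illustration only (not Marten's API).
class InMemorySession
{
    private readonly Dictionary<int, string> _database;
    private readonly List<Customer> _pending = new List<Customer>();
    private int _lastId; // simple counter; Marten uses its own identity generation

    public InMemorySession(Dictionary<int, string> database) => _database = database;

    // Queue a document, assigning an Id first if it does not have one yet.
    public void Store(Customer customer)
    {
        if (customer.Id == 0)
        {
            customer.Id = ++_lastId;
        }
        _pending.Add(customer);
    }

    // Serialize every queued document to JSON, write it, then clear the queue.
    public void SaveChanges()
    {
        foreach (var customer in _pending)
        {
            _database[customer.Id] = JsonSerializer.Serialize(customer);
        }
        _pending.Clear();
    }
}

class Customer
{
    public int Id { get; set; }
    public string Name { get; set; } = "";
}
```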

To create a new customer object and store it, the following code could be used:

```csharp
// Store document (& auto-generate Id)
using (var session = store.LightweightSession())
{
    var customer = new Customer
    {
        Name = "Arnold",
        Addresses =
        {
            new Address { Line1 = "Address 1 Line 1", Line2 = "Address 1 Line 2", Line3 = "Address 1 Line 3" },
            new Address { Line1 = "Address 2 Line 1", Line2 = "Address 2 Line 2", Line3 = "Address 2 Line 3" }
        }
    };

    // Add customer to session
    session.Store(customer);

    // Persist changes to the database
    session.SaveChanges();
}
```

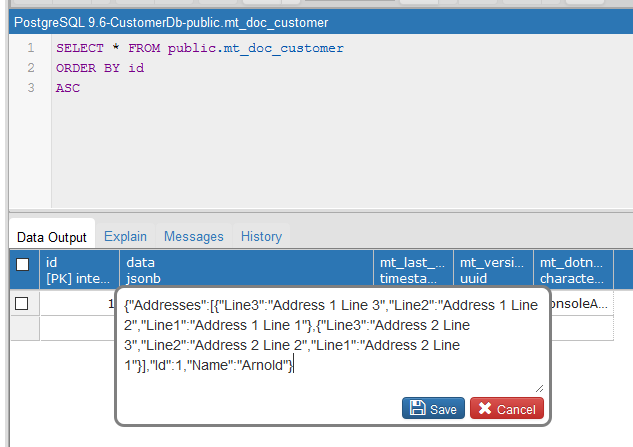

Once the above code executes, the customer is stored in a PostgreSQL table called “mt_doc_customer”. The Customer object is serialized to JSON and stored in a jsonb column, and that JSON also contains all of the customer's addresses:

```json
{
  "Addresses": [
    {
      "Line3": "Address 1 Line 3",
      "Line2": "Address 1 Line 2",
      "Line1": "Address 1 Line 1"
    },
    {
      "Line3": "Address 2 Line 3",
      "Line2": "Address 2 Line 2",
      "Line1": "Address 2 Line 1"
    }
  ],
  "Id": 1,
  "Name": "Arnold"
}
```
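Marten performs this serialization itself, but a document of the same shape can be reproduced locally with System.Text.Json, which also makes it visible that the addresses travel inside the customer rather than as separate records. This sketch assumes System.Text.Json as a stand-in serializer; Marten's configured serializer may differ in details, and PostgreSQL's jsonb type does not preserve the order of object keys, so the key order printed here can differ from the stored document.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

var customer = new Customer
{
    Id = 1,
    Name = "Arnold",
    Addresses =
    {
        new Address { Line1 = "Address 1 Line 1", Line2 = "Address 1 Line 2", Line3 = "Address 1 Line 3" },
        new Address { Line1 = "Address 2 Line 1", Line2 = "Address 2 Line 2", Line3 = "Address 2 Line 3" }
    }
};

// One JSON document holds the customer and its nested addresses.
string json = JsonSerializer.Serialize(customer, new JsonSerializerOptions { WriteIndented = true });
Console.WriteLine(json);

// Round trip: the addresses come back as part of the same object graph.
var copy = JsonSerializer.Deserialize<Customer>(json);
Console.WriteLine(copy.Addresses.Count); // 2

class Customer
{
    public int Id { get; set; }
    public string Name { get; set; } = "";
    public List<Address> Addresses { get; set; } = new List<Address>();
}

class Address
{
    public string Line1 { get; set; } = "";
    public string Line2 { get; set; } = "";
    public string Line3 { get; set; } = "";
}
```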

There are a number of ways to retrieve documents and convert them back into .NET objects. One is to use Marten's LINQ support, as the following code shows:

```csharp
// Retrieve by query
using (var session = store.QuerySession())
{
    var customer = session.Query<Customer>().Single(x => x.Name == "Arnold");

    Console.WriteLine(customer.Id);
    Console.WriteLine(customer.Name);
}
```

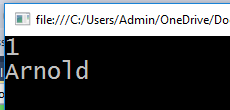

When executed, this code displays the retrieved customer details as the following screenshot shows:
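The query above uses `Single`, which in standard LINQ returns the only matching element and throws an `InvalidOperationException` when there is no match or more than one. The sketch below demonstrates those semantics with LINQ to Objects over an in-memory list, assuming Marten's translation of `Single` and `SingleOrDefault` follows the same contract. When a missing customer is an expected case, `SingleOrDefault` (which returns null on no match, but still throws on duplicates) may be the better fit.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

var customers = new List<Customer>
{
    new Customer { Id = 1, Name = "Arnold" },
    new Customer { Id = 2, Name = "Sarah" }
};

// Exactly one match: Single returns it.
var arnold = customers.Single(x => x.Name == "Arnold");
Console.WriteLine(arnold.Id); // 1

// No match: SingleOrDefault returns null, Single throws.
var nobody = customers.SingleOrDefault(x => x.Name == "Kyle");
Console.WriteLine(nobody == null); // True
try
{
    customers.Single(x => x.Name == "Kyle");
}
catch (InvalidOperationException ex)
{
    Console.WriteLine($"Single threw: {ex.Message}");
}

// More than one match: even SingleOrDefault throws.
customers.Add(new Customer { Id = 3, Name = "Arnold" });
try
{
    customers.SingleOrDefault(x => x.Name == "Arnold");
}
catch (InvalidOperationException)
{
    Console.WriteLine("More than one customer named Arnold");
}

class Customer
{
    public int Id { get; set; }
    public string Name { get; set; } = "";
}
```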

To learn more about the document database features of Marten, check out my Pluralsight courses: Getting Started with .NET Document Databases Using Marten and Working with Data and Schemas in Marten.

You can start watching with a Pluralsight free trial.
